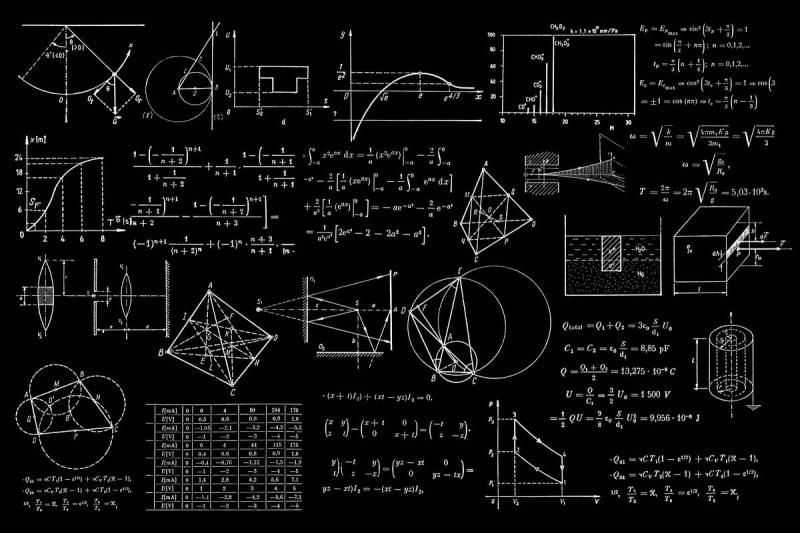

Fundamental Math Theories Needed to Understand AI

Posted on 08 Oct 2024

Mathematical Foundations

Understanding artificial intelligence (AI) requires grasping several key mathematical theories that form its foundation.

Here are ten essential concepts that will deepen your understanding of AI and how it works:


Curse of Dimensionality

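This phenomenon arises when analyzing data in high-dimensional spaces. As the number of dimensions increases, the volume of the space grows exponentially, so a fixed amount of data becomes increasingly sparse. Distances between points also become more uniform, which makes it harder for algorithms to identify meaningful patterns and means the amount of data needed to cover the space grows exponentially as well.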
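One way to see this sparsity is to watch distances lose their meaning. The sketch below assumes points drawn uniformly from the unit hypercube and uses a hypothetical helper, `relative_contrast`, which measures (max distance minus min distance) divided by min distance from one random query point. In low dimensions the nearest neighbor is far closer than the farthest point; in high dimensions all points end up at roughly the same distance.

```python
import math
import random

def relative_contrast(dim, n_points=500, seed=0):
    """(max - min) / min Euclidean distance from a random query point to
    n_points points drawn uniformly from the unit hypercube [0, 1]^dim."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dim)]
        dists.append(math.dist(query, point))
    return (max(dists) - min(dists)) / min(dists)

# The contrast shrinks as dimensionality grows: "near" and "far" blur together.
for dim in (2, 10, 100, 1000):
    print(f"dim={dim:>4}  relative contrast={relative_contrast(dim):.3f}")
```

Algorithms that rely on nearest neighbors or distance thresholds are especially affected, since a shrinking contrast leaves little signal to separate similar points from dissimilar ones.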

Law of Large Numbers

A cornerstone of statistics, this theorem states that as the sample size grows, the sample mean of independent, identically distributed observations converges to the expected value. This principle assures that larger datasets yield more reliable estimates, which makes it vital for statistical learning methods.
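A quick simulation makes the convergence concrete. This minimal sketch assumes a fair six-sided die, whose expected value is 3.5, and uses a hypothetical helper, `sample_mean_of_die_rolls`, with a fixed seed so the run is reproducible.

```python
import random

def sample_mean_of_die_rolls(n, seed=42):
    """Average of n simulated rolls of a fair six-sided die."""
    rng = random.Random(seed)
    return sum(rng.randint(1, 6) for _ in range(n)) / n

# The gap between the sample mean and the expected value (3.5) tends to shrink as n grows.
for n in (10, 100, 10_000, 100_000):
    estimate = sample_mean_of_die_rolls(n)
    print(f"n={n:>7}  sample mean={estimate:.4f}  error={abs(estimate - 3.5):.4f}")
```

Small samples can land noticeably away from 3.5, while the large ones settle close to it, which is the same reason a larger training or validation set gives a more trustworthy estimate of a model's average error.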

Central Limit Theorem

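This theorem states that, for independent, identically distributed samples from a distribution with finite variance, the distribution of the sample mean approaches a normal distribution as the sample size increases, regardless of the shape of the original distribution. This result underpins many of the inference tools used in machine learning, such as confidence intervals and hypothesis tests.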
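The sketch below checks this numerically, assuming a strongly skewed source distribution (exponential with rate 1, which has mean 1 and standard deviation 1). If the theorem holds, means of samples of size n should have a standard deviation close to 1/sqrt(n), and about 95% of them should fall within 1.96 of those standard errors of the true mean. The helper name `sample_means` is illustrative.

```python
import math
import random
import statistics

def sample_means(sample_size, n_samples=5_000, seed=7):
    """Means of n_samples independent samples, each of sample_size draws from Exp(1)."""
    rng = random.Random(seed)
    return [
        statistics.fmean([rng.expovariate(1.0) for _ in range(sample_size)])
        for _ in range(n_samples)
    ]

n = 50
means = sample_means(n)

# Exp(1) has mean 1 and standard deviation 1, so the CLT predicts the
# sample mean is approximately Normal(mean=1, sd=1/sqrt(n)).
predicted_se = 1.0 / math.sqrt(n)
observed_se = statistics.stdev(means)

# Under a normal approximation, roughly 95% of sample means fall within 1.96 SEs.
coverage = sum(abs(m - 1.0) < 1.96 * predicted_se for m in means) / len(means)

print(f"predicted SE={predicted_se:.4f}  observed SE={observed_se:.4f}")
print(f"fraction within 1.96 SE of the true mean: {coverage:.3f}")
```

Even though individual exponential draws are far from bell-shaped, their averages behave almost exactly as the normal approximation predicts, which is what justifies normal-based confidence intervals around averaged quantities.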

Bayes’ Theorem

A fundamental concept in probability theory, Bayes’ Theorem explains how to update the probability of a hypothesis in light of new evidence. It is the backbone of the Bayesian inference methods used in AI.
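In symbols, P(H | E) = P(E | H) · P(H) / P(E), where P(H) is the prior belief, P(E | H) is the likelihood of the evidence, and P(E) is the total probability of the evidence. The sketch below applies it to a hypothetical screening test; the prevalence, sensitivity, and false-positive rate are made-up illustration values, and the second update assumes the two test results are conditionally independent.

```python
def posterior(prior, likelihood, false_positive_rate):
    """P(H | E) for a binary hypothesis H after observing evidence E.

    prior: P(H); likelihood: P(E | H); false_positive_rate: P(E | not H).
    """
    # Law of total probability: P(E) = P(E|H) P(H) + P(E|not H) P(not H)
    evidence = likelihood * prior + false_positive_rate * (1 - prior)
    return likelihood * prior / evidence

# Hypothetical test: 1% prevalence, 99% sensitivity, 5% false-positive rate.
p1 = posterior(prior=0.01, likelihood=0.99, false_positive_rate=0.05)
print(f"after one positive test:  {p1:.3f}")  # → 0.167

# Today's posterior becomes tomorrow's prior when a second positive result arrives.
p2 = posterior(prior=p1, likelihood=0.99, false_positive_rate=0.05)
print(f"after two positive tests: {p2:.3f}")  # → 0.798
```

The first result shows why the prior matters: even a fairly accurate test leaves only about a 1 in 6 chance of the condition when it is rare, and each additional piece of evidence shifts the belief further.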

Overfitting and Underfitting

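Overfitting occurs when a model learns the noise in the training data along with the signal, so it performs well on that data but generalizes poorly to new data. Underfitting happens when a model is too simplistic to capture the underlying patterns, so it performs poorly even on the training data. Striking the right balance between the two is essential for building models that perform well on unseen data.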
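Both failure modes can be seen by comparing training error with error on held-out data. This sketch swaps in k-nearest-neighbors regression as the model (the post does not name one), fit to noisy samples of an assumed target, sin(2πx). With k=1 the model memorizes every noisy training point; with k equal to the whole training set it predicts a single constant; an intermediate k sits between the two. The helper names are illustrative.

```python
import math
import random

def make_data(n, rng, noise=0.5):
    """Noisy samples of y = sin(2*pi*x) with x drawn uniformly from [0, 1]."""
    xs = [rng.random() for _ in range(n)]
    return [(x, math.sin(2 * math.pi * x) + rng.gauss(0.0, noise)) for x in xs]

def knn_predict(train, x, k):
    """Average the targets of the k training points whose x is closest to x."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def mse(train, data, k):
    """Mean squared error of k-NN predictions on the (x, y) pairs in data."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in data) / len(data)

rng = random.Random(0)
train = make_data(100, rng)
test = make_data(1_000, rng)

for k, label in ((1, "overfit"), (10, "balanced"), (len(train), "underfit")):
    print(f"k={k:>3} ({label:>8}): train MSE={mse(train, train, k):.3f}"
          f"  test MSE={mse(train, test, k):.3f}")
```

The k=1 model has zero training error yet a high test error (overfitting), the constant model is poor on both (underfitting), and the intermediate k typically achieves the lowest test error, which is the balance the paragraph describes.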